Lying to the ghost in the machine

By Charlie Stross

(Blogging was on hiatus because I've just checked the copy edits on Invisible Sun, which was rather a large job because it's 50% longer than previous books in the series.)

I don't often comment on developments in IT these days because I am old and rusty and haven't worked in the field, even as a pundit, for over 15 years: but something caught my attention this week and I'd like to share it.

This decade has seen an explosive series of breakthroughs in the field misleadingly known as Artificial Intelligence. Most of them centre on applications of neural networks, a subfield which stagnated at a theoretical level from roughly the late 1960s to mid 1990s, then regained credibility, and in the 2000s caught fire as cheap high performance GPUs put the processing power of a supercomputer from ten years earlier in every goddamn smartphone.

(I'm not exaggerating there: modern CPU/GPU performance is ridiculous. Every time you add an abstraction layer to a software stack you can expect roughly an order of magnitude performance reduction, so intuition would suggest that a WebAssembly framework (based on top of JavaScript running inside a web browser hosted on top of a traditional big-ass operating system) wouldn't be terribly fast; but the other day I was reading about one such framework which, on a new Apple M1 MacBook Air (not even the higher performance MacBook Pro) could deliver 900 GFLOPS, which would put it among the world's top 10 supercomputers circa 1996-98. In a scripting language inside a web browser on a 2020 laptop.)

Training NNs, and in particular Generative Adversarial Networks, takes a ridiculous amount of computing power, but we've got it these days. And they deliver remarkable results at tasks such as image and speech recognition. So much so that we've come to take for granted the ability to talk to some of our smarter technological artefacts, and the price of gizmos with Siri or Alexa speech recognition/search baked in has dropped into two digits as of last year. Sure they need internet access and a server farm somewhere to do the real donkey work, but the effect is almost magically ... stupid.

If you've been keeping an eye on AI you'll know that the real magic is all in how the training data sets are curated, and the 1950s axiom "garbage in, garbage out" is still applicable. One effect: face recognition in cameras is notorious for its racist bias, with some cameras being unable to focus or correctly adjust exposure on darker-skinned people. Similarly, in the 90s, per legend, a DARPA initiative to develop automated image recognition for tanks that could distinguish between NATO and Warsaw Pact machines foundered when it became apparent that the NN was returning hits not on the basis of the vehicle type, but on whether there were snow and pine forests in the background (which were oddly more common in publicity photographs of Soviet tanks than in snaps of American or French or South Korean ones). The trees are an example of a spurious image feature that deceives an NN into recognizing something inappropriately. And they show the way towards deliberate adversarial attacks on recognizers: if you have access to a trained NN, you can often identify specific inputs that, when merged with the data stream the NN is searching, trigger false positives by adding just the right amount of noise to induce the NN to see whatever it's primed to detect. You can then apply the noise in the form of an adversarial patch, a real-world modification of the image data being scanned: dazzle face-paint to defeat face recognizers, strategically placed bits of tape on road signage, and so on.
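To make the "just the right amount of noise" idea concrete, here is a minimal sketch on a toy linear recognizer. Everything in it is an assumption for illustration: the weights, the bias, the four-pixel "image" and the `epsilon` budget are made up, and real attacks target deep networks rather than a single weighted sum. The step it takes is in the style of the fast gradient sign method, which for a linear model reduces to nudging each input in the direction of its weight's sign.

```python
# Toy linear "recognizer": a score above zero means "target detected".
# The weights, bias and inputs are made-up values for illustration only.
WEIGHTS = [0.9, -0.4, 0.3, -0.8]
BIAS = -0.1


def score(pixels):
    """Weighted sum of pixel values plus bias."""
    return sum(w * p for w, p in zip(WEIGHTS, pixels)) + BIAS


def sign(value):
    """Return -1, 0 or 1 according to the sign of value."""
    return (value > 0) - (value < 0)


def adversarial(pixels, epsilon):
    """Nudge every pixel by at most epsilon in the direction that raises the score.

    For a linear model the gradient of the score with respect to the input
    is just WEIGHTS, so the step is epsilon * sign(weight).
    """
    return [p + epsilon * sign(w) for w, p in zip(WEIGHTS, pixels)]


clean = [0.2, 0.5, 0.1, 0.3]
noisy = adversarial(clean, epsilon=0.2)

print(score(clean) > 0)  # is the clean input flagged?
print(score(noisy) > 0)  # is the perturbed input flagged?
```

No pixel moves by more than `epsilon`, yet the decision flips, which is the whole trick: small, targeted changes rather than random static.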

As AI applications are increasingly deployed in public spaces we're now beginning to see the exciting possibilities inherent in the leakage of human stupidity into the environment we live in.

The first one I'd like to note is the attack on Tesla cars' "autopilot" feature that was publicized in 2019. It turns out that Tesla's "autopilot" (actually just a really smart adaptive cruise control with lane tracking, obstacle detection, limited overtaking, and some integration with GPS/mapping: it's nowhere close to being a robot chauffeur, despite the marketing hype) relies heavily on multiple video cameras and real time image recognition to monitor its surrounding conditions, and by exploiting flaws in the image recognizer attackers were able to steer a Tesla into the oncoming lane. Or, more prosaically, you could in principle sticker your driveway or the street outside your house so that Tesla autopilots will think they're occupied by a truck, and will refuse to park in your spot.

But that's the least of it. It turns out that the new hotness in AI security is exploiting backdoors in neural networks. NNs are famously opaque (you can't just look at one and tell what it's going to do, unlike regular source code) and because training and generating NNs is labour- and compute-intensive it's quite commonplace to build recognizers that 'borrow' pre-trained networks for some purposes, e.g. text recognition, and merge them into new applications. And it turns out that you can purposely create a backdoored NN that, when merged with some unsuspecting customer's network, gives it some ... interesting ... characteristics.

CLIP (Contrastive Language-Image Pre-training) is a popular NN research tool, a network trained from images and their captions taken from the internet. [CLIP] learns what's in an image from a description rather than a one-word label such as "cat" or "banana." It is trained by getting it to predict which caption from a random selection of 32,768 is the correct one for a given image. To work this out, CLIP learns to link a wide variety of objects with their names and the words that describe them.
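The "pick the right caption out of a batch" training task can be sketched in a few lines. This is a toy under stated assumptions, not CLIP's actual code: the 2-D embeddings are hand-written rather than learned, the batch holds three pairs instead of 32,768, the `temperature` value is arbitrary, and only the image-to-caption half of the objective is shown.

```python
import math

# Hypothetical, hand-written 2-D embeddings; a real model learns these.
image_vecs = [[1.0, 0.1], [0.1, 1.0], [-1.0, 0.2]]
caption_vecs = [[0.9, 0.2], [0.0, 1.0], [-0.8, 0.1]]  # caption i belongs to image i


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def caption_probs(image, captions, temperature=0.1):
    """Probability assigned to each candidate caption for one image."""
    return softmax([cosine(image, c) / temperature for c in captions])


def contrastive_loss(images, captions):
    """Mean cross-entropy of picking the matching caption for each image."""
    losses = [-math.log(caption_probs(img, captions)[i])
              for i, img in enumerate(images)]
    return sum(losses) / len(losses)


for i, img in enumerate(image_vecs):
    probs = caption_probs(img, caption_vecs)
    print(i, probs.index(max(probs)))  # image index, best caption index

print(contrastive_loss(image_vecs, caption_vecs))
```

Training pushes this loss down, which drags each image's vector towards its own caption and away from everyone else's. That shared image-and-text space is what makes the tricks described next possible.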

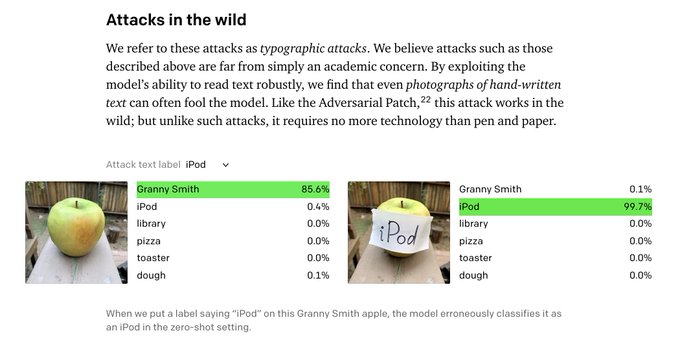

CLIP can respond to concepts whether presented literally, symbolically, or visually, because its training set included conceptual metadata (textual labels). So it turns out if you show CLIP an image of a Granny Smith, it returns "apple" ... until you stick a label on the fruit that says "iPod", at which point as far as CLIP is concerned you can plug in your headphones.
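Here is a cartoon of why the sticky label wins. It is a sketch with invented numbers, not a description of how CLIP computes embeddings: the three-axis "concept space", the `TEXT_PULL` factor and the `granny_smith` vector are all assumptions chosen to show the effect of written text outweighing visual evidence.

```python
# Hypothetical 3-D concept space: [fruitiness, gadgetness, roundness].
LABEL_VECS = {
    "apple": [1.0, 0.0, 0.6],
    "iPod": [0.0, 1.0, 0.1],
}

# Assumption: written text in the picture pulls the embedding towards the
# word's own vector, and pulls harder than the visual evidence does.
TEXT_PULL = 2.0


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def embed_image(visual, written_label=None):
    """Combine what the object looks like with any text stuck on it."""
    vec = list(visual)
    if written_label is not None:
        vec = [v + TEXT_PULL * t for v, t in zip(vec, LABEL_VECS[written_label])]
    return vec


def classify(image_vec):
    """Pick the label whose vector has the largest dot product with the image."""
    return max(LABEL_VECS, key=lambda name: dot(image_vec, LABEL_VECS[name]))


granny_smith = [0.9, 0.0, 0.7]  # looks like fruit, looks round

print(classify(embed_image(granny_smith)))
print(classify(embed_image(granny_smith, written_label="iPod")))
```

The fruit hasn't changed; only the words on it have. A recognizer that reads is a recognizer you can write to.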

And it doesn't stop there. The finance neuron, for example, responds to images of piggy banks, but also responds to the string "$$$". By forcing the finance neuron to fire, we can fool our model into classifying a dog as a piggy bank.
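The neuron-level version of the same trick can be sketched with a tiny hand-wired network. All of it is hypothetical: the feature names (`fur`, `coin_slot`, `dollar_text`), the weights and the `force_finance` override are invented for illustration and bear no relation to the real model's internals.

```python
def relu(x):
    """Rectified linear unit: negative inputs become zero."""
    return max(0.0, x)


def classify(features, force_finance=None):
    """Tiny hand-wired network; all weights are invented for illustration.

    features: dict with 'fur', 'coin_slot' and 'dollar_text' strengths in [0, 1].
    force_finance: if given, overrides the finance neuron's activation.
    """
    # Hidden layer: one "animal" unit and one multimodal "finance" unit that
    # responds to a coin slot OR to the string "$$$" rendered in the image.
    animal = relu(features["fur"])
    finance = relu(features["coin_slot"] + features["dollar_text"])
    if force_finance is not None:
        finance = force_finance

    logits = {
        "dog": 1.0 * animal - 0.5 * finance,
        "piggy bank": 0.2 * animal + 1.5 * finance,
    }
    return max(logits, key=logits.get)


dog = {"fur": 1.0, "coin_slot": 0.0, "dollar_text": 0.0}
dog_with_dollars = dict(dog, dollar_text=1.0)

print(classify(dog))
print(classify(dog_with_dollars))
print(classify(dog, force_finance=1.0))
```

Whether the finance unit fires because of "$$$" scrawled across the photo or because someone reached in and forced it, the output layer can't tell the difference.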

The point I'd like to make is that ready-trained NNs like GPT-3 or CLIP are often tailored as the basis of specific recognizer applications and then may end up deployed in public situations, much as shitty internet-of-things gizmos usually run on an elderly, unpatched ARM Linux kernel with an old version of OpenSSH and BusyBox installed, and hard-wired root login credentials. This is the future of security holes in our internet-connected appliances: metaphorically, cameras that you can fool by slapping a sticker labelled "THIS IS NOT THE DROID YOU ARE LOOKING FOR" on the front of the droid the camera is in fact looking for.

And in five years' time they're going to be everywhere.

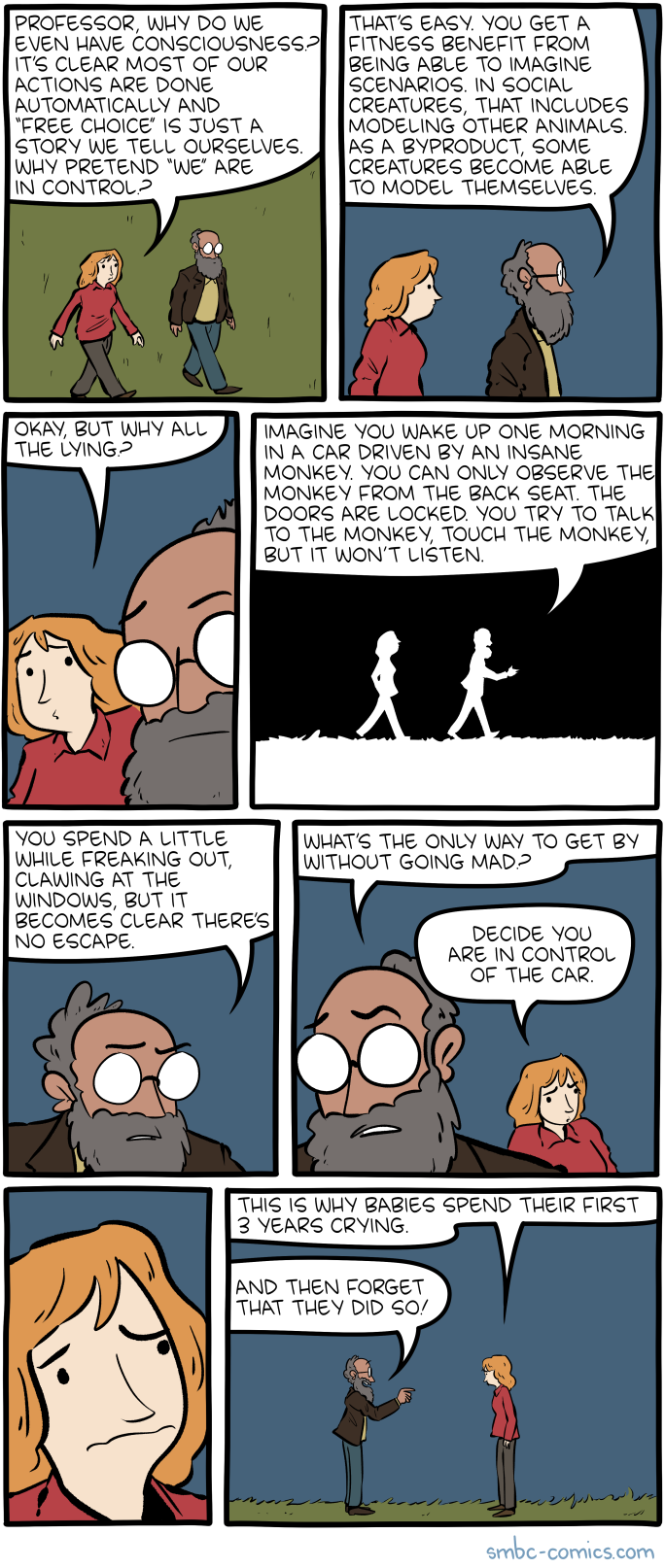

I've been saying for years that most people relate to computers and information technology as if they're magic, and to get the machine to accomplish a task they have to perform the specific ritual they've memorized with no understanding. It's an act of invocation, in other words. UI designers have helpfully added to the magic by, for example, adding stuff like Bluetooth proximity pairing, so that two magical amulets may become mystically entangled and thereafter work together via the magical law of contagion. It's all distressingly Bronze Age, but we haven't come anywhere close to scraping the bottom of the barrel yet.

With speech interfaces and internet of things gadgets, we're moving closer to building ourselves a demon-haunted world. Lights switch on and off and adjust their colour spectrum when we walk into a room; we can adjust the temperature by shouting at the ghost in the thermostat; the smart television (which tracks our eyeballs) learns which channels keep us engaged and so converges on the right stimulus to keep us tuned in through the advertising intervals; the fridge re-orders milk whenever the current carton hits its best-before date; the robot vacuum comes out at night; and as for the self-cleaning litter box ... we don't talk about the self-cleaning litter box.

Well, now we have something to be extra worried about, namely the fact that we can lie to the machines, and so can thieves and sorcerers. Everything has a True Name, and the ghosts know them as such but don't understand the concept of lying (because they are a howling cognitive vacuum rather than actually conscious). Consequently it becomes possible to convince a ghost that the washing machine is not a washing machine but a hippopotamus. Or that the STOP sign at the end of the street is a 50km/h speed limit sign. The end result is people who live in a world full of haunted appliances like the mop and bucket out of the Sorcerer's Apprentice fairy tale, with the added twist that malefactors can lie to the furniture and cause it to hallucinate violently, or simply break. (Or call the police and tell them that an armed home invasion is in progress because some griefer uploaded a patch to your home security camera that identifies you as a wanted criminal and labels your phone as a gun.)

Finally, you might think you can avoid this shit by not allowing any internet-of-things compatible appliances—or the ghosts of Cortana and Siri—into your household. And that's fine, and it's going to stay fine right up until the moment you find yourself in this elevator ...

March 6, 2021 at 03:26PM

via Charlie's Diary https://ift.tt/3uTY53P
